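1. Deployment methods

Kubernetes can be deployed in the following ways:

minikube: a tool for quickly setting up a single-node Kubernetes cluster

kubeadm: a tool for quickly setting up a Kubernetes cluster

Binary packages: download the binary package of each component from the official site and install them one by one

This guide uses kubeadm.

2. Cluster planning

A Kubernetes cluster can be deployed with one master and multiple workers, or with multiple masters and multiple workers. This guide uses one master and multiple workers.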

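| Server name | Server IP | Role | CPU (minimum) | Memory (minimum) |
|---|---|---|---|---|
| k8s-master | 192.168.23.160 | master | 2 cores | 2 GB |
| k8s-node1 | 192.168.23.161 | node | 2 cores | 2 GB |
| k8s-node2 | 192.168.23.162 | node | 2 cores | 2 GB |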
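Later commands in this guide address the machines by name (for example k8s-master:6443), so the names have to resolve on every machine. The following is a minimal sketch of one way to do that through /etc/hosts, assuming no DNS is available for these names. The helper name `add_hosts_entries` is hypothetical, and the optional argument lets the function write to a file other than /etc/hosts.

```shell
# Hypothetical helper: append the planned hostname mappings to a hosts file,
# skipping any hostname that is already present.
add_hosts_entries() {
  hosts_file=${1:-/etc/hosts}
  while read -r ip name; do
    # -w matches the hostname as a whole word, so k8s-node1 does not match k8s-node10.
    grep -qw "$name" "$hosts_file" || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done <<EOF
192.168.23.160 k8s-master
192.168.23.161 k8s-node1
192.168.23.162 k8s-node2
EOF
}
```

Running `add_hosts_entries` as root on each of the three machines adds the missing entries; running it a second time changes nothing.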

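3. Install Docker

The Docker version must be compatible with Kubernetes. References:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.23.md

https://github.com/kubernetes/kubernetes/blob/v1.23.6/build/dependencies.yaml

containerd must in turn be compatible with Docker. References:

https://docs.docker.com/engine/release-notes/

https://github.com/moby/moby/blob/v20.10.7/vendor.conf

Based on these, this guide installs Docker 20.10.7 and containerd 1.4.6.

For the Docker installation itself, see the article "centos7基于yum repository方式安装docker和卸载docker" (installing and uninstalling Docker on CentOS 7 from the yum repository).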
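As a rough sketch of how those two versions might be pinned with yum: this assumes the Docker CE yum repository is already configured on the host and that the packages use Docker's usual docker-ce, docker-ce-cli and containerd.io names. The helper name `docker_packages` is made up for illustration.

```shell
# Hypothetical helper: print the yum package specs for the pinned versions.
# Assumes the Docker CE yum repository is already configured on the host.
docker_packages() {
  docker_version=${1:-20.10.7}
  containerd_version=${2:-1.4.6}
  printf '%s\n' \
    "docker-ce-$docker_version" \
    "docker-ce-cli-$docker_version" \
    "containerd.io-$containerd_version"
}
```

The list can then be passed to yum, for example `yum install -y $(docker_packages)`, followed by `systemctl enable --now docker`.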

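4. Install the k8s cluster

4.1 Base environment

1. Disable SELinux

Temporarily (this switches SELinux to permissive mode until the next reboot):

[root@k8s-master ~]# setenforce 0

Permanently (takes effect after the server is rebooted):

[root@k8s-master ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

2. Disable swap

A swap partition is a virtual-memory partition: once physical memory is used up, disk space is used as if it were memory. This hurts system performance, so swap is disabled here. If swap cannot be turned off, the cluster has to be configured to tolerate it (by default the kubelet refuses to start while swap is on).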
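Whether swap is currently active can be checked before and after the steps below. This is a small sketch that reads /proc/swaps; the helper name `swap_is_active` is hypothetical, and the optional argument allows pointing it at another file in the same format.

```shell
# Hypothetical helper: succeed if any swap area is active.
# The optional argument is a file in /proc/swaps format.
swap_is_active() {
  swaps_file=${1:-/proc/swaps}
  # The first line is a header; every further line is an active swap area.
  awk 'NR > 1 { found = 1 } END { exit !found }' "$swaps_file"
}
```

For example, `swap_is_active && echo "swap is still on"` prints the message only while at least one swap area is in use.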

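Temporarily:

[root@k8s-master ~]# swapoff -a

Permanently (takes effect after the server is rebooted):

[root@k8s-master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab

3. Bridge settings

For iptables on the server to see bridged traffic, bridge filtering and IP forwarding have to be enabled.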
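Once the steps in this subsection have been applied, the resulting kernel settings can be double-checked with a helper like the following. It is only a sketch: the function name `check_bridge_sysctls` is hypothetical, the three keys are the ones commonly set for this purpose, and the optional argument exists so the check can be pointed at a directory other than /proc/sys.

```shell
# Hypothetical helper: report whether the kernel settings used for bridged
# traffic and forwarding are set to 1. The optional argument is the sysctl root.
check_bridge_sysctls() {
  sysctl_root=${1:-/proc/sys}
  status=0
  for key in net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables \
             net/ipv4/ip_forward; do
    file="$sysctl_root/$key"
    # A missing net/bridge file usually means br_netfilter is not loaded.
    if [ -r "$file" ] && [ "$(cat "$file")" = "1" ]; then
      echo "ok      $key"
    else
      echo "not set $key"
      status=1
    fi
  done
  return $status
}
```

Calling `check_bridge_sysctls` with no argument inspects the running kernel and returns a non-zero status if any of the three values is missing or not 1.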

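Create the file /etc/modules-load.d/k8s.conf:

[root@k8s-master ~]# cat > /etc/modules-load.d/k8s.conf << EOF
overlay
br_netfilter
EOF

Load the br_netfilter bridge-filtering module and the overlay (OverlayFS) module. This is done before applying the sysctl settings, because the net.bridge.* keys only exist once br_netfilter is loaded:

[root@k8s-master ~]# modprobe br_netfilter
[root@k8s-master ~]# modprobe overlay

Create the file /etc/sysctl.d/k8s.conf:

[root@k8s-master ~]# cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

Apply the settings:

[root@k8s-master ~]# sysctl --system

Check that the modules are loaded:

[root@k8s-master ~]# lsmod | grep -e br_netfilter -e overlay

4. Configure IPVS

kube-proxy can implement Services in iptables mode or in IPVS mode. IPVS mode generally performs better, but the IPVS kernel modules have to be loaded manually before it can be used.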
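After the modules have been loaded as described in this subsection, a helper like the following can list any that are still missing. It is a sketch that reads /proc/modules, so modules compiled directly into the kernel would be reported as missing. The function name `missing_ipvs_modules` is hypothetical.

```shell
# Hypothetical helper: print every IPVS module from the list that is not
# currently loaded. The optional argument is a file in /proc/modules format.
missing_ipvs_modules() {
  modules_file=${1:-/proc/modules}
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    # Each line of /proc/modules starts with the module name and a space.
    grep -q "^$mod " "$modules_file" || echo "$mod"
  done
}
```

An empty output from `missing_ipvs_modules` means all four modules are loaded.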

Install ipset and ipvsadm:

[root@k8s-master ~]# yum install ipset ipvsadm

Create the script file /etc/sysconfig/modules/ipvs.modules with the following content, then run it:

[root@k8s-master ~]# cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
[root@k8s-master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

Check that the modules are loaded:

[root@k8s-master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

4.2 Install kubelet, kubeadm, and kubectl

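Add the yum repository:

[root@k8s-master ~]# cat [kubernetes]

Install the packages, then start kubelet:

[root@k8s-master ~]# yum install -y --setopt=obsoletes=0 kubelet-1.23.6 kubeadm-1.23.6 kubectl-1.23.6
[root@k8s-master ~]# systemctl enable --now kubelet

Notes:

obsoletes=1 makes yum apply its obsoletes processing during updates, so a package that obsoletes an older one replaces it; obsoletes=0 turns this off, so the old package is kept

Once kubelet is started, journalctl -f -u kubelet shows its detailed logs

kubelet uses systemd as its cgroup driver by default (kubeadm has set this default since v1.22)

After it is started, kubelet restarts every few seconds: it sits in a crash loop, waiting for instructions from kubeadm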
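Because the kubelet expects the systemd cgroup driver, the container runtime should use the same one; Docker commonly defaults to cgroupfs on hosts like CentOS 7. The following is a minimal sketch of switching Docker over through daemon.json, assuming no daemon.json exists yet. The helper name `write_docker_daemon_json` is hypothetical.

```shell
# Hypothetical helper: write a Docker daemon.json that selects the systemd
# cgroup driver. Refuses to overwrite an existing file.
write_docker_daemon_json() {
  daemon_json=${1:-/etc/docker/daemon.json}
  if [ -e "$daemon_json" ]; then
    echo "$daemon_json already exists; merge the exec-opts entry by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$daemon_json")"
  cat > "$daemon_json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `write_docker_daemon_json && systemctl restart docker`, the active driver can be confirmed with `docker info --format '{{.CgroupDriver}}'`.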

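4.3 Download the images each machine needs

List the image versions the cluster requires:

[root@k8s-master ~]# kubeadm config images list

Write the image download script images.sh, then run it. Worker nodes only need kube-proxy and pause.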

[root@k8s-master ~]# tee ./images.sh #!/bin/bash
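One way to derive the per-role image list is sketched below, under the assumption that the images are pulled with docker pull using the names that kubeadm prints. The function name `node_images` is hypothetical, and no particular mirror registry is assumed.

```shell
# Hypothetical filter: read image names on stdin (one per line) and keep
# only those a worker node needs, namely kube-proxy and pause.
node_images() {
  grep -E '(^|/)(kube-proxy|pause):'
}
```

On the master, `kubeadm config images list --kubernetes-version v1.23.6 | xargs -n 1 docker pull` would pull everything; on a worker node the same pipeline with `| node_images` inserted before xargs pulls only the two required images.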


4.4 Initialize the master node (run only on the master)

[root@k8s-master ~]# kubeadm init
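A typical invocation for the cluster planned in section 2 might pass flags like the ones below. Every value here is an assumption made for illustration: the advertise address and endpoint come from the planning table, 10.96.0.0/12 is kubeadm's default Service CIDR, and 10.244.0.0/16 is the Pod network that the flannel manifest uses by default. The helper name `kubeadm_init_args` is hypothetical.

```shell
# Hypothetical helper: print one kubeadm init flag per line.
# All values are assumptions for the cluster planned in section 2.
kubeadm_init_args() {
  printf '%s\n' \
    "--apiserver-advertise-address=192.168.23.160" \
    "--control-plane-endpoint=k8s-master" \
    "--kubernetes-version=v1.23.6" \
    "--service-cidr=10.96.0.0/12" \
    "--pod-network-cidr=10.244.0.0/16"
}
```

The flags can be reviewed with `kubeadm_init_args` and then used as `kubeadm init $(kubeadm_init_args)`.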


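Notes:

Add --v=6 or --v=10 to get more detailed logs

The networks passed in the different parameters (node addresses, Service CIDR, Pod CIDR) must not overlap. For example, 192.168.2.x and 192.168.3.x overlap when the prefix is /16, because both fall inside 192.168.0.0/16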
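The overlap rule can be checked mechanically before running kubeadm init. The following is a small sketch in plain shell arithmetic for IPv4 only; the function names `ip_to_int` and `cidr_overlap` are hypothetical helpers, not part of kubeadm.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if two CIDR blocks (address/prefix) share any address.
cidr_overlap() {
  addr_a=${1%/*}; len_a=${1#*/}
  addr_b=${2%/*}; len_b=${2#*/}
  # Two blocks overlap exactly when they agree on the bits covered by the
  # shorter of the two prefixes.
  if [ "$len_a" -lt "$len_b" ]; then shorter=$len_a; else shorter=$len_b; fi
  drop=$(( 32 - shorter ))
  [ $(( $(ip_to_int "$addr_a") >> drop )) -eq $(( $(ip_to_int "$addr_b") >> drop )) ]
}
```

For instance, `cidr_overlap 192.168.2.0/16 192.168.3.0/16` succeeds, while `cidr_overlap 192.168.2.0/24 192.168.3.0/24` fails because the two /24 networks are distinct.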

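An alternative way to run kubeadm init

# Print the default configuration

If init fails, roll back with the following command:

[root@k8s-master ~]# kubeadm reset -f

4.5 Set up .kube/config (run only on the master)

[root@k8s-master ~]# mkdir -p $HOME/.kube

kubectl reads this configuration file.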
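kubeadm init prints the full set of commands for this step when it succeeds. A sketch of the same steps as a reusable function follows, assuming the admin kubeconfig is at kubeadm's usual location /etc/kubernetes/admin.conf. The helper name `setup_kubeconfig` is hypothetical.

```shell
# Hypothetical helper: copy the admin kubeconfig into the current user's
# .kube directory and make it owned by, and readable only by, that user.
setup_kubeconfig() {
  admin_conf=${1:-/etc/kubernetes/admin.conf}
  kube_dir=${2:-$HOME/.kube}
  mkdir -p "$kube_dir" &&
    cp "$admin_conf" "$kube_dir/config" &&
    chown "$(id -u):$(id -g)" "$kube_dir/config" &&
    chmod 600 "$kube_dir/config"
}
```

Running `setup_kubeconfig` as the user who will use kubectl is enough; both arguments are optional and only matter when the paths differ.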

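4.6 Install the flannel network plugin (run only on the master)

The plugin is deployed as a DaemonSet, so it runs on every node

According to the current README.md on GitHub, it supports Kubernetes 1.17+

If the deployment fails because the images cannot be downloaded, first replace the image sources in the YAML file with a mirror registry inside China

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

This pulls two images: rancher/mirrored-flannelcni-flannel:v0.17.0 and rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.1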
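Replacing the image source can be done on a downloaded copy of the manifest before applying it. This is a sketch using sed; the helper name `rewrite_image_prefix` is hypothetical, and registry.example.com in the usage note is a placeholder, not a real mirror.

```shell
# Hypothetical helper: rewrite the registry prefix of every "image:" line
# in a manifest. The old prefix is used as a sed pattern, so keep it simple.
rewrite_image_prefix() {
  manifest=$1 old_prefix=$2 new_prefix=$3
  sed "s|image: $old_prefix|image: $new_prefix|" "$manifest" > "$manifest.tmp" &&
    mv "$manifest.tmp" "$manifest"
}
```

With the manifest saved locally as kube-flannel.yml, `rewrite_image_prefix kube-flannel.yml rancher/ registry.example.com/rancher/` followed by `kubectl apply -f kube-flannel.yml` deploys flannel from the substituted registry.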

At this point, check the status of the master node (for example with kubectl get nodes and kubectl get pods -A)


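4.7 Join the worker nodes (run only on the worker nodes)

The join command comes from the output of the successful kubeadm init above:

[root@k8s-node1 ~]# kubeadm join k8s-master:6443 --token 0qc9py.n6az0o2jy1tryg2b

The token is valid for 24 hours. A new join command with a fresh token can be generated on the master:

[root@k8s-master ~]# kubeadm token create --print-join-command

Then, on the master, watch the status with watch -n 3 kubectl get pods -A and kubectl get nodes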
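For scripted checks, the node list can be filtered down to the nodes that have not become Ready yet. This is a sketch that parses the plain-text output of kubectl; the function name `not_ready_nodes` is hypothetical.

```shell
# Hypothetical filter: read "kubectl get nodes --no-headers" output on stdin
# and print the name of every node whose STATUS does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}
```

`kubectl get nodes --no-headers | not_ready_nodes` prints nothing once every node has joined and its network plugin is running.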

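4.7.1 Letting a worker node run kubectl commands

On the master, copy $HOME/.kube to the $HOME directory of the worker node, for example with scp:

[root@k8s-master ~]# scp -r $HOME/.kube k8s-node1:$HOME

5. Deploy the dashboard (run only on the master)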

Official Kubernetes web UI: https://github.com/kubernetes/dashboard

5.1 Deploy

For the version mapping between dashboard and Kubernetes, see: https://github.com/kubernetes/dashboard/blob/v2.5.1/go.mod

[root@k8s-master ~]# kubectl apply -f https:
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
[root@k8s-master ~]#

This pulls two images: kubernetesui/dashboard:v2.5.1 and kubernetesui/metrics-scraper:v1.0.7

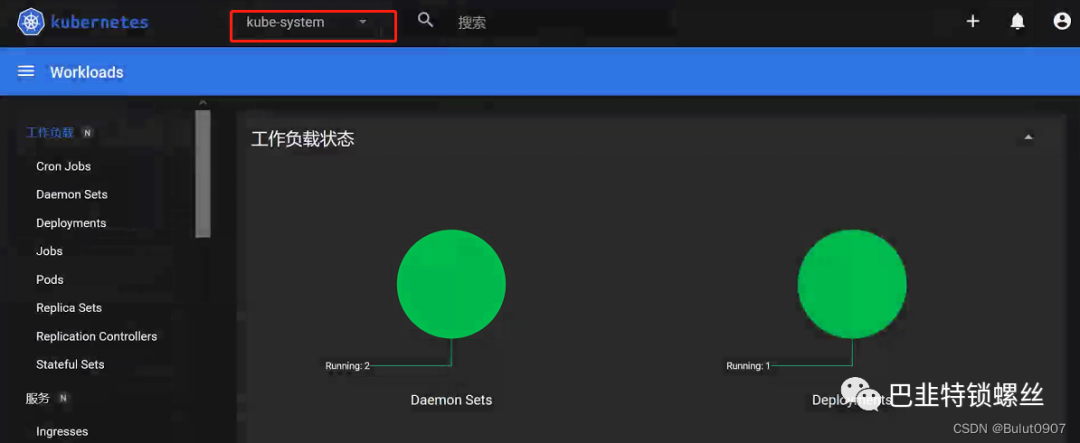

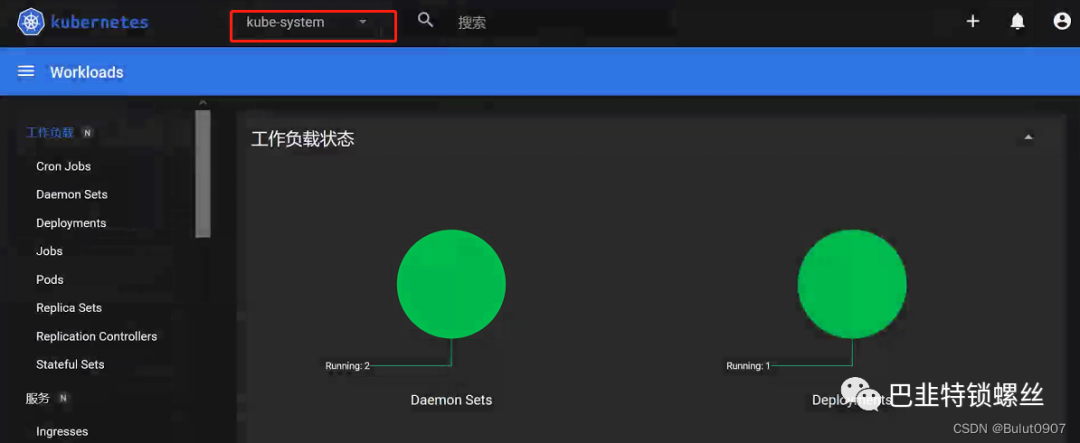

On the master, watch the status with watch -n 3 kubectl get pods -A

5.2 Set the access port

Change type: ClusterIP to type: NodePort:

[root@k8s-master ~]# kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Look up the port:

[root@k8s-master ~]# kubectl get svc -A | grep kubernetes-dashboard

Open the dashboard page at https://k8s-node1:32314 (32314 is the NodePort assigned in this example), as shown below
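The NodePort appears in the PORT(S) column in the form port:nodePort/protocol. A sketch for pulling it out of that text is shown here; the function name `node_ports` is hypothetical.

```shell
# Hypothetical filter: read "kubectl get svc" output on stdin and print the
# NodePort from each PORT(S) entry of the form <port>:<nodePort>/<protocol>.
node_ports() {
  grep -oE '[0-9]+:[0-9]+/(TCP|UDP|SCTP)' | cut -d: -f2 | cut -d/ -f1
}
```

For example, `kubectl get svc -n kubernetes-dashboard kubernetes-dashboard | node_ports` prints the port to use in the browser URL. The same value can also be read directly with `-o jsonpath='{.spec.ports[0].nodePort}'`.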

5.3 Create an access account

Create the resource file, then apply it:

[root@k8s-master ~]# tee ./dash.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF
[root@k8s-master ~]# kubectl apply -f dash.yaml
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created

5.4 Get the access token

[root@k8s-master ~]# kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

The token is: eyJhbGci…gMZ0RqeQ

Copy the token into the login page to log in

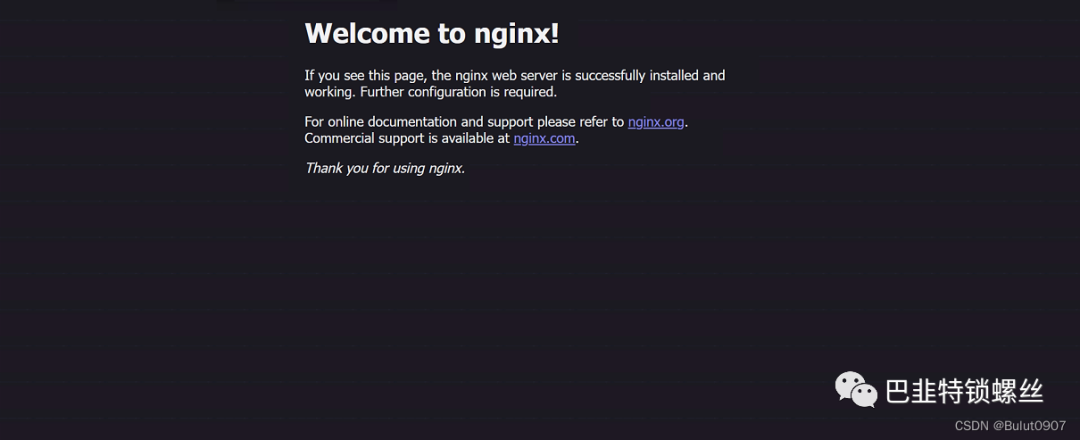

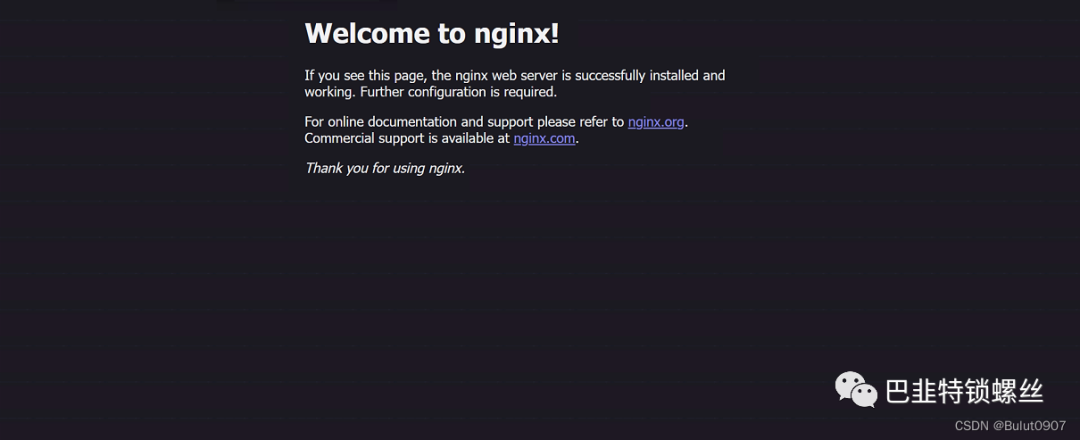

6. Install nginx as a test

Deploy:

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx

On the master, watch the status with watch -n 3 kubectl get pods -A

Expose the port:

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

Look up the port:

[root@k8s-master ~]# kubectl get pods,svc

Open the nginx page at http://k8s-node1:30523 (30523 is the NodePort assigned in this example)

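7. Deploying other optional modules

7.1 Installing metrics-server

For an introduction to metrics-server and how to install it, see the "kubernetes-sigs/metrics-server introduction and installation" section of this blog post

7.2 Enabling IPVS

To enable IPVS, see the "enabling ipvs" section of this blog post

7.3 Installing ingress-nginx

To install the ingress-nginx Controller, see the "ingress-nginx Controller installation" section of this blog post

7.4 Setting up an NFS server

To set up an NFS server, see the "setting up an NFS server" section of this blog post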

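Copyright notice: this article was first published on CSDN by Bulut0907 under the CC 4.0 BY-SA license; when reposting, please include a link to the original source and this notice. This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland license. Original link: https: Special thanks to the original author. All rights to this article belong to the original author; for commercial reposting please contact the original author, and for non-commercial reposting please credit the source.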